All published articles of this journal are available on ScienceDirect.

Exploring User Adoption and Experience of Automated Machine Learning Platforms with a Focus on Learning Curves, Usability, and Design Considerations

Abstract

Introduction

Human daily activities and businesses generate a significant volume of data, which is expected to be transformed for the benefit of both businesses and society. Organisations use machine learning (ML) platforms to make informed decisions based on insights gleaned from their real-time data. However, learning ML is challenging, which makes it difficult for employees to acquire the skills quickly and efficiently. The introduction of automated machine learning (AutoML) has simplified this process, but it is essential to understand how users adopt and apply AutoML platforms to address real-world problems.

Methods

To achieve this, we conducted a quantitative study with 38 users focusing on four key areas: (1) the learning curve in ML and AutoML environments, (2) the design and usability strengths and weaknesses of AutoML platforms, (3) disparities in user experience between novices and professionals, and (4) design factors to enhance usability.

Results

Our findings revealed that users, particularly those with limited programming experience, have high expectations for the usability of AutoML; however, they also exhibit low awareness and adoption rates in the African context.

Discussion

The study illuminates gender disparities in technology adoption and identifies critical usability concerns, including the need for improved interpretability, feature engineering modules, and code integration for learning purposes. Additionally, we provide empirical evidence demonstrating AutoML’s advantages regarding training time and reproducibility compared to traditional machine learning tools.

Conclusion

This work offers novel insights into human-centered AutoML design, emphasizing inclusivity, explainability, and user-friendly interfaces. By addressing regional and gender-specific challenges, we propose actionable recommendations to democratize ML and enhance AutoML platforms. Future research should expand upon these findings by engaging frequent AutoML users to further refine usability and satisfaction metrics.

1. INTRODUCTION

In today's world, every aspect of human life and business generates high volumes of data daily, and organisations must make sound decisions without human intervention, doing so as quickly and accurately as possible [1]. Most industries that handle large amounts of data have recognised the value of machine learning technology in deriving insights from their real-time data, making them more productive, efficient, and competitively relevant [2]. Machine Learning (ML) is an offshoot of the fields of Computer Science and Artificial Intelligence that focuses on using data, algorithms, and statistical techniques to imitate human reasoning. It mirrors how humans learn and solve complex tasks.

Recent advancements demonstrate the usefulness and relevance of ML beyond traditional domains such as healthcare and finance, extending to emerging applications such as energy harvesting and human-computer interaction. For instance, triboelectric nanogenerators (TENGs) based on hexagonal boron nitride (h-BN) composite films use ML for handwriting recognition, demonstrating how lightweight, self-powered systems can integrate intelligent data processing [3]. Such innovations highlight ML's transformative potential and also reveal the need for accessible tools, such as AutoML, that democratize ML development for non-experts.

Applying machine learning algorithms to an application or task often requires coding skills or experience. Machine learning is a major field expected to drive the Fourth Industrial Revolution, and demand for ML skills is high across diverse fields. As a result, many individuals, both technical and non-technical, are eager to learn it. A significant obstacle for newcomers and non-technical learners is the difficulty of the programming languages commonly used for ML and of their integrated development environments [3]. Yet the core of user experience is usability and productivity, not merely the positive emotions derived from using a tool or product [4]. Recently, more visual and easier-to-use platforms such as Google AutoML, Azure AutoML, AutoKeras, AutoScikit, and BigML have been developed to help ML enthusiasts, newcomers, and even professionals perform various ML tasks [5].

Automated Machine Learning (AutoML) makes machine learning accessible to non-experts by automating the application of machine learning models to real-world problems [6, 7]. This accessibility matters because ML has expanded beyond computing and is now widely used in fields including medical sciences, business, environmental sciences, chemical sciences, natural sciences, agriculture, and the arts [8, 9]; it has become a multidisciplinary practice. Its applications span sectors such as healthcare, transportation, agriculture, marketing, manufacturing, financial services, government, and cybersecurity [10-13].

To put this study in perspective, we hypothesize that:

- AutoML platforms significantly reduce the learning curve for machine learning tasks compared to traditional programming-based ML tools, especially for users with limited or no coding experience.

- Design factors greatly influence the usability and adoption of AutoML.

- Contextual barriers limit the effectiveness of AutoML in real-world applications.

This study tests these hypotheses through a quantitative evaluation of user experiences, learning curves, and computational performance metrics. We focus specifically on the usability and learning challenges associated with AutoML platforms. Although general ML usability challenges are recognized, the primary emphasis is on AutoML systems, which automate key capabilities such as model selection, hyperparameter tuning, feature engineering, and pipeline optimization.

1.1. Problem Statement

In today’s data-driven world, daily human activities and businesses generate vast amounts of data that organizations must transform into valuable insights for better decision-making. Machine learning (ML) platforms are widely used for this purpose; however, the complexity of learning ML presents significant challenges for employees, making it difficult for them to quickly and easily grasp the concepts. The introduction of Automated Machine Learning (AutoML) has the potential to ease this learning curve by automating many of the more complex aspects of traditional ML processes. Nonetheless, there is limited understanding of how users, especially those new to machine learning, accept and implement AutoML in solving real-world problems.

1.2. Research Aim and Questions

This study aims to explore user experience with AutoML platforms by focusing on four key areas. First, it examines the learning curve associated with machine learning, evaluating how users' performance aligns with the time taken to complete ML tasks. Second, it investigates the strengths and weaknesses of ML and AutoML concerning design and usability. Third, it identifies gaps in user experience between newcomers to the ML domain and experienced professionals. Lastly, it seeks to determine which design factors could enhance the overall user experience with AutoML platforms.

The research questions are as follows:

(i) Are there gaps in using generic ML environments such as the Python SDK and MATLAB?

(ii) What strengths exist in the design and usability of AutoML platforms (e.g., Google AutoML, Edge Impulse), which have recently been developed to facilitate the learning and application of machine learning?

(iii) What weaknesses exist that can be improved further?

To address research question (i), we engaged users experienced in generic machine learning environments such as Python. Participants answering research questions (ii) and (iii) were restricted to individuals with AutoML experience.

2. LITERATURE REVIEW

2.1. The AI-HCI Concept

Artificial Intelligence (AI) is a field of computer science focused on developing intelligent machines capable of performing complex tasks that typically require human intelligence [14, 15, 17, 18]. AI aims to emulate human reasoning, intuition, and discernment.

Human-Computer Interaction (HCI) is a multidisciplinary field within computer science that optimizes how users interact with computing devices and applications by designing interactive interfaces that meet users' needs and deliver a positive user experience [16, 18]. It plays a crucial role in the success of companies that develop software.

According to [19], AI and HCI are two computing fields united by a common focus on the human user: AI systems, whether software or hardware, are designed for human use. Establishing a strong relationship between the two fields is therefore essential. When developing AI applications, attention to human-computer interaction, including usability and user experience, is critical for designing applications that align with specific user needs; this is known as the user-oriented approach to AI. The increasing use of AI technologies in user-oriented applications has emphasized the need to study the relationship between these two fields [20].

2.2. The Concept of Machine Learning

Machine learning is the process of using mathematical models of data and statistical learning theory to help a computer learn, continue learning, and improve based on experience. In addition to employing mathematical models and statistical theory, it is also essential for machine learning experts to be proficient in programming languages such as Python and R to tackle many challenging tasks across various domains. It is noteworthy that building a machine learning solution still requires substantial resources as well as human expertise.

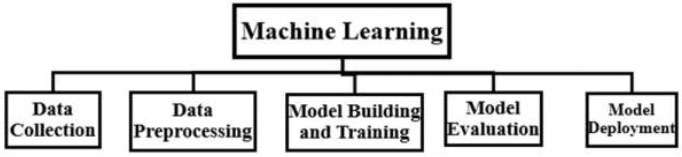

To build an ML model, practitioners go through the following steps:

- Data Collection

- Data Preprocessing

- Model Building and Training

- Model Evaluation

- Model Deployment

Each of these steps, shown in Fig. (1), requires human experts to apply their programming proficiency.

Steps in Machine Learning Model Building [21].
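To make the five steps concrete, the following is a minimal sketch, in plain Python with only the standard library, of the manual workflow a practitioner would otherwise code by hand. The synthetic dataset, the nearest-centroid model, and all function names are illustrative assumptions and are not part of this study.

```python
import math
import random


def collect_data(n_per_class=50, seed=0):
    """Step 1 (data collection): a synthetic two-class dataset stands in for real data."""
    rng = random.Random(seed)
    data = []
    for label, (cx, cy) in ((0, (0.0, 0.0)), (1, (3.0, 3.0))):
        for _ in range(n_per_class):
            data.append(([rng.gauss(cx, 1.0), rng.gauss(cy, 1.0)], label))
    rng.shuffle(data)
    return data


def fit_scaler(rows):
    """Step 2 (preprocessing): z-score standardisation using training statistics only."""
    n_features = len(rows[0])
    means = [sum(r[j] for r in rows) / len(rows) for j in range(n_features)]
    stds = [
        math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / len(rows)) or 1.0
        for j in range(n_features)
    ]
    return lambda x: [(x[j] - means[j]) / stds[j] for j in range(n_features)]


def train_nearest_centroid(X, y):
    """Step 3 (model building and training): one mean vector per class."""
    centroids = {}
    for label in set(y):
        members = [x for x, t in zip(X, y) if t == label]
        centroids[label] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


def predict(centroids, x):
    """Assign x to the class whose centroid is closest (squared Euclidean distance)."""
    return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))


def accuracy(centroids, X, y):
    """Step 4 (evaluation): fraction of correct predictions on held-out data."""
    return sum(predict(centroids, x) == t for x, t in zip(X, y)) / len(y)


data = collect_data()
split = int(0.8 * len(data))  # hold out 20% of the rows for testing
train, test = data[:split], data[split:]
scale = fit_scaler([x for x, _ in train])
X_train = [scale(x) for x, _ in train]
y_train = [t for _, t in train]
X_test = [scale(x) for x, _ in test]
y_test = [t for _, t in test]
model = train_nearest_centroid(X_train, y_train)
test_accuracy = accuracy(model, X_test, y_test)


def serve(raw_features):
    """Step 5 (deployment): apply the same preprocessing, then predict."""
    return predict(model, scale(raw_features))


print(f"test accuracy: {test_accuracy:.2f}")
print("prediction for [2.8, 3.1]:", serve([2.8, 3.1]))
```

Every step here is written and wired together by hand; this manual effort is what AutoML platforms aim to reduce.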

As organizations become increasingly involved in predictive analytics and in developing AI applications for insight and sound decision-making, it has become imperative for both technical and non-technical professionals to understand machine learning. However, using ML algorithms for a task often requires coding skills. Because acquiring these skills demands substantial learning resources, automated machine learning (AutoML) was developed to alleviate the challenges non-experts face in using ML to enhance productivity [22].

2.3. Automated Machine Learning (AutoML)

Automated machine learning (AutoML) is the process of automating the selection, parameterization, and composition of machine learning models so that they can be applied to real-world problems. According to [23], AutoML addresses the challenge of automatically finding the pipeline (i.e., the preprocessing and learning algorithms and their hyperparameters) with the best generalization performance.
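This pipeline search is commonly formalised as the combined algorithm selection and hyperparameter optimisation (CASH) problem. The notation below is a standard formulation supplied for illustration, not one taken from this study: the system chooses an algorithm from a candidate set, together with hyperparameters from that algorithm's space, to minimise the average validation loss over k cross-validation splits.

```latex
% \mathcal{A}: set of candidate algorithms; \Lambda^{(j)}: hyperparameter space of A^{(j)}
% (D_train^{(i)}, D_valid^{(i)}): the i-th of k cross-validation splits
% \mathcal{L}: loss of A^{(j)} with hyperparameters \lambda, trained on D_train^{(i)} and scored on D_valid^{(i)}
A^{*}_{\lambda^{*}} \in \operatorname*{arg\,min}_{A^{(j)} \in \mathcal{A},\ \lambda \in \Lambda^{(j)}}
  \frac{1}{k} \sum_{i=1}^{k} \mathcal{L}\left(A^{(j)}_{\lambda},\, D_{\text{train}}^{(i)},\, D_{\text{valid}}^{(i)}\right)
```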

AutoML automates the tasks involved in training machine learning models. Tasks that human ML experts would otherwise hand-code include data cleaning, preprocessing, feature selection, hyperparameter tuning, and model building. With AutoML, these tedious, time-consuming, and repetitive tasks can be completed more quickly, and new modeling approaches can be explored. It automates much of the manual tuning and model search that human experts perform to find well-performing hyperparameters and models.
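The hyperparameter-tuning step can be illustrated with a small grid search in which each candidate value is scored by cross-validation and the best-scoring value is kept, the kind of loop an expert would otherwise hand-code. This minimal standard-library sketch assumes a k-nearest-neighbour classifier and a synthetic one-dimensional dataset, both hypothetical. Production AutoML systems search much larger spaces, often with more sample-efficient strategies such as Bayesian optimisation.

```python
import random


def make_data(n=60, seed=1):
    """Hypothetical 1-D two-class dataset with overlapping classes."""
    rng = random.Random(seed)
    data = [(rng.gauss(0.0, 1.0), 0) for _ in range(n // 2)]
    data += [(rng.gauss(1.5, 1.0), 1) for _ in range(n // 2)]
    rng.shuffle(data)
    return data


def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x (k odd, so no ties)."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if votes * 2 > k else 0


def cross_val_accuracy(data, k, folds=5):
    """Mean accuracy of k-NN over `folds` contiguous validation folds."""
    size = len(data) // folds
    scores = []
    for i in range(folds):
        valid = data[i * size:(i + 1) * size]
        train = data[:i * size] + data[(i + 1) * size:]
        correct = sum(knn_predict(train, x, k) == y for x, y in valid)
        scores.append(correct / len(valid))
    return sum(scores) / folds


def tune_k(data, candidates=(1, 3, 5, 7, 9, 11)):
    """Grid search: score every candidate k and keep the best one."""
    results = {k: cross_val_accuracy(data, k) for k in candidates}
    best_k = max(results, key=results.get)
    return best_k, results


data = make_data()
best_k, results = tune_k(data)
for k, score in results.items():
    print(f"k={k:2d}  cv accuracy={score:.3f}")
print("selected k:", best_k)
```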

AutoML tools and platforms differ in their features, complexity, and level of automation. Some platforms offer a user-friendly interface or graphical user interface (GUI) that enables users to specify their requirements and constraints. Others provide programmatic APIs or command-line interfaces (CLIs) for more advanced users to interact with the AutoML system. Furthermore, some AutoML solutions utilize cloud computing resources to manage large-scale data and computationally intensive tasks.

The goal of AutoML is to democratize machine learning by automating repetitive tasks, reducing the need for extensive domain expertise, and enabling users to focus more on problem formulation and the interpretation of results. However, it is important to note that AutoML is not a one-size-fits-all solution and may not always outperform expert-designed machine learning pipelines. It should be viewed as a tool that complements human expertise and facilitates faster iteration and experimentation in the machine-learning workflow.

AutoML systems typically utilize a combination of machine learning algorithms, optimization techniques, and search strategies to automate the various tasks involved in model building. They are designed to learn from historical data to enhance the accuracy and speed of the model-building process. There are two main types of AutoML:

Fully Automated Machine Learning: In this approach, the entire model-building process is automated, encompassing data preparation, feature engineering, algorithm selection, hyperparameter tuning, and model deployment [24]. The user simply provides the input data and specifies the problem to be solved, while the system handles the rest.

Semi-Automated Machine Learning: In this approach, the user retains some control over the model-building process. The system automates certain tasks, such as feature engineering and hyperparameter tuning, while allowing the user to specify the algorithm to be used and manually adjust certain parameters.
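The difference between the two approaches can be sketched as a single search loop in which the user either leaves the algorithm open (fully automated) or fixes it (semi-automated), so that only the hyperparameters are left to the system. This is a minimal standard-library illustration. The two toy algorithms, the search space, and the function name `auto_ml` are hypothetical, and a simple hold-out split stands in for the cross-validation and smarter search strategies real platforms use.

```python
import random


def make_data(n=80, seed=2):
    """Hypothetical 1-D two-class dataset (illustrative only)."""
    rng = random.Random(seed)
    data = [(rng.gauss(0.0, 1.0), 0) for _ in range(n // 2)]
    data += [(rng.gauss(2.0, 1.0), 1) for _ in range(n // 2)]
    rng.shuffle(data)
    return data


def fit_knn(train, k):
    """k-nearest-neighbour classifier; k is its only hyperparameter."""
    def predict(x):
        nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
        return 1 if 2 * sum(y for _, y in nearest) > k else 0
    return predict


def fit_nearest_mean(train):
    """Classify by the closer class mean; this toy model has no hyperparameters."""
    means = {}
    for c in (0, 1):
        values = [x for x, y in train if y == c]
        means[c] = sum(values) / len(values)
    return lambda x: min(means, key=lambda c: abs(x - means[c]))


# Hypothetical search space: algorithm name -> (fit function, hyperparameter grid).
SEARCH_SPACE = {
    "knn": (fit_knn, [{"k": 1}, {"k": 3}, {"k": 5}, {"k": 7}]),
    "nearest_mean": (fit_nearest_mean, [{}]),
}


def auto_ml(data, algorithm=None):
    """Fully automated if algorithm is None; semi-automated if the user fixes it."""
    split = int(0.75 * len(data))
    train, valid = data[:split], data[split:]
    names = list(SEARCH_SPACE) if algorithm is None else [algorithm]
    leaderboard = []
    for name in names:
        fit, grid = SEARCH_SPACE[name]
        for params in grid:
            model = fit(train, **params)
            acc = sum(model(x) == y for x, y in valid) / len(valid)
            leaderboard.append((acc, name, params))
    # Best validation accuracy first; the whole list is returned, not just the winner.
    leaderboard.sort(key=lambda row: row[0], reverse=True)
    return leaderboard


data = make_data()
full = auto_ml(data)                   # system chooses the algorithm and its hyperparameters
semi = auto_ml(data, algorithm="knn")  # user fixes the algorithm; system tunes only k
print("fully automated best:", full[0])
print("semi-automated best:", semi[0])
```

Returning the full leaderboard rather than only the winning configuration is one simple way to expose the intermediate results whose absence is discussed in Section 2.5.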

2.4. Human-centred AutoML

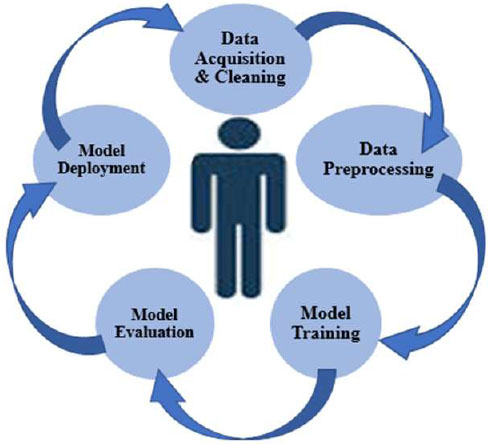

While the HCI community has proposed guidelines and strategies for designing interactive user interfaces for AI applications for over two decades, implementing these guidelines has received greater emphasis in recent times [25]. A key approach in interaction design is user-centered design, which involves gaining insights into users' experiences through usability testing. A tool, product, or software is considered user-centered if its design phase revolves around a deep understanding of the product's users and their motivations for using it, so that the real issues users face are understood and addressed before the product is created. As highlighted in the blog post titled “Rethinking AutoML: Advancing from a Machine-Centered to Human-Centered Paradigm” by [26], early AutoML approaches did not adequately consider the diverse categories of users (see Fig. (2)).

Human-centered AutoML.

As shown in Fig. (2), a user-centered AutoML is tailored to the needs and established workflows of users (which, in this case, are practitioners), thus supporting them in their daily business operations in the most effective and efficient way. Some of the AutoML solutions include Auto-Sklearn, Google Cloud AutoML, H2O AutoML, AutoKeras, and Amazon Lex.

2.5. Gaps in the Usage of AutoML

Existing studies on AutoML have identified several limitations and drawbacks associated with its usage. One significant drawback is that AutoML is often viewed as a black-box solution, making it challenging for users to understand how the models arrive at their predictions. Another limitation is the lack of intermediate results provided to users, which hinders their ability to evaluate the system effectively. Additionally, AutoML solutions typically do not facilitate human-in-the-loop interactions, further restricting user control and involvement in the model development process. Addressing these limitations would contribute to democratizing machine learning, increasing accessibility, and promoting innovation and business efficiency.

3. METHODOLOGY

To understand users’ experiences with generic ML environments and AutoML environments from the perspectives of both newcomers and seasoned users, we conducted a user study involving three groups: ML practitioners, who manage the business side of machine learning and engage with stakeholders about the possibilities and benefits of pursuing goal-driven ML tasks for social good; ML enthusiasts, who are passionate about machine learning and its results but are not necessarily experts or professionals in ML; and newcomers to ML [26].

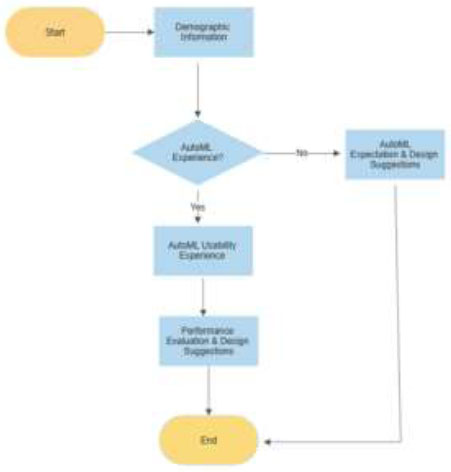

This study employs a quantitative approach using questionnaires. In designing the questionnaire, we adopted the System Usability Scale (SUS) [27] and ISO usability metrics [28] to measure users' experience. The questionnaire consists of five sections. The first section covers demographic information, experience, and the learning curve in acquiring ML skills using a generic environment such as Python. By learning curve, we mean how quickly and effectively learners can acquire ML or programming skills. Questions include: How was the learning curve for you? Do you think a simpler or automated coding/ML platform could have eased the learning process? After Section 1, participants with no AutoML experience were asked to state their expectations and design suggestions for how AutoML could ease the learning curve of acquiring machine learning skills. For participants with AutoML experience, Sections 3 and 4 focus on their usability experience and performance evaluation of AutoML. Participants were asked to base the evaluation on the AutoML platforms they had explored; the most notable were RapidMiner and BigML, and others included Edge Impulse, AzureML, and PyCaret. Finally, participants provided suggestions on how AutoML could be improved based on their experience. A summary of the questionnaire structure and prompts is shown in Fig. (3).

Structure and Prompt of Questionnaire.
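Because the questionnaire draws on the System Usability Scale [27], it may help to recall how SUS responses are conventionally scored. Each of the ten items is answered on a 1-5 scale; odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The sketch below implements that standard rule; the function name and the example responses are hypothetical and are not data from this study.

```python
def sus_score(responses):
    """Standard SUS score (0-100) from ten 1-5 Likert responses, item 1 first."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten responses on a 1-5 scale")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd-numbered items are positively worded, even-numbered items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


# Hypothetical respondent, for illustration only.
example = [4, 2, 4, 3, 5, 2, 4, 2, 4, 3]
print(sus_score(example))
```

The results in Section 4.3 are reported as item-level Likert percentages, so this scoring rule is given only as context for the instrument.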

3.1. Recruitment and Data Collection

Recruitment was conducted by sending messages to various social media groups and communities of HCI and AI professionals. These communities include a university in southwest Nigeria, the AI Saturday Club, the Data Scientists Network, and Women in AI. In addition, a snowball approach was adopted for participant recruitment. Participation was voluntary, and ethical clearance was obtained from the appropriate institution in Nigeria. Recruitment ran from September 2022 to April 2023. Because users in Nigeria with AutoML experience proved difficult to find, recruitment and the study took longer than expected.

3.2. Data Analysis

During the initial phase of the study, 38 participants with experience in conventional ML platform environments were involved. The second phase examined user experience within AutoML environments: of the initial 38 participants, 16 had prior exposure to AutoML and took part in the usability evaluation. Nielsen's guideline (1994), which recommends a minimum of 3-5 users for a usability study, indicated that 16 participants were sufficient to identify and address usability concerns specific to AutoML.
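The small-sample rule of thumb is usually motivated by a simple problem-discovery model: if each test user independently reveals a proportion λ of the usability problems, n users are expected to reveal P(n) = 1 - (1 - λ)^n of them. The sketch below evaluates this model with λ = 0.31, an average often quoted by Nielsen; that value is an assumption here, since the actual discovery rate for AutoML interfaces was not measured in this study, and the function name is hypothetical.

```python
def problems_found(n_users, discovery_rate=0.31):
    """Expected share of usability problems found by n users: 1 - (1 - rate) ** n."""
    return 1 - (1 - discovery_rate) ** n_users


for n in (3, 5, 16):
    print(f"{n:2d} users -> {problems_found(n):.1%}")
```

Under these assumptions, five users would be expected to reveal about 84% of the problems and sixteen users more than 99%, although the model's independence assumption rarely holds exactly for heterogeneous groups such as newbies and experts.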

In the first phase of the study, which investigated user experience in a generic environment, 38 participants took part. Of these, 28.95% were female and 71.05% were male. In terms of ML experience, 55.3% had 1-2 years, 39.5% had 3-4 years, and 5.3% had 5-6 years. By category, 39.5% identified as ML experts, 36.8% as ML enthusiasts, 18.4% as newbies, and 5.3% as “other.” In the second phase, which focused specifically on user experience with AutoML, 16 participants took part: 12.5% were female and 87.5% were male. Of these, 37.5% had 1-2 years of ML experience, 56.25% had 3-4 years, and 6.25% had 5-6 years.

3.3. Case Study of Pre-Training

The “TinyML4D” initiative is an example of a formal pre-training program that aims to democratize embedded machine learning (ML) training in resource-scarce settings. Recognizing limited access to hardware, software, and cross-disciplinary human resources as hindrances, the project offers open-source, modular curricula and experiential workshops co-designed by international educators. These foundational resources serve as pre-training, giving students the programming, electronics, and ML skills they need before progressing to advanced applications [29].

By creating a worldwide community of learning institutions and providing localised content, TinyML4D reduces educational inequalities and promotes inclusivity in the ML field. The case shows the value of pre-training in building capacity and enabling students in developing nations to engage with cutting-edge technologies [29].

4. RESULTS AND DISCUSSION

4.1. Phase 1: Exploratory Analysis

In this section, we discuss insights gained from analyzing participants' demographic information in relation to their general ML experience and their expectations of AutoML. Three key factors emerged from this exploratory analysis, which are discussed as follows:

4.1.1. Learning Curve

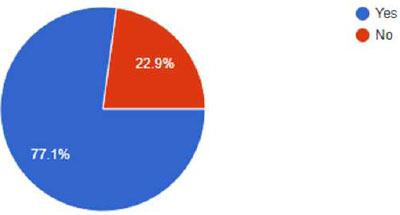

One notable observation was that individuals with prior programming experience found it easier to learn machine learning than those without such experience, who had difficulty grasping the concepts. Fig. (4) presents the results, which suggest that the introduction of AutoML could ease the learning curve associated with machine learning: 27 participants (77.1%) agreed that AutoML could facilitate the learning process, while 8 (22.9%) disagreed. This indicates that respondents hold high expectations of AutoML's usability.

Percent of participants, by programming experience, who agree that introducing AutoML would facilitate learning machine learning.

4.1.2. Apathy Issues

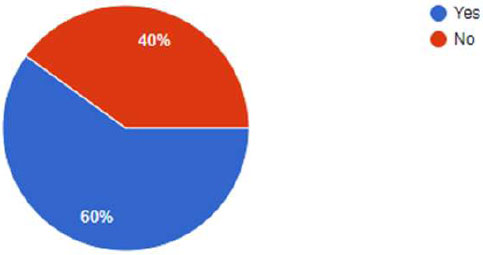

AutoML, which aims to address the AI skills gap, has encountered a lack of enthusiasm among university students in Nigeria, particularly those attending universities in the Southwestern region. Our study uncovered a low level of experience in AutoML among the respondents, largely due to a lack of awareness. Fig. (5) highlights this issue, with 60% of the participants indicating that they are hearing about AutoML for the first time.

Awareness Rate of AutoML: Percent of participants hearing about AutoML for the first time.

4.1.3. Expectations from AutoML

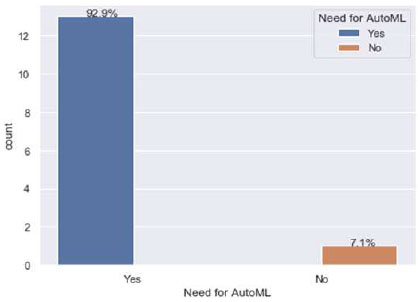

We further analyzed responses from participants with no prior AutoML experience to understand their willingness to use AutoML if given access or exposure, as well as their expectations and design suggestions for the platform. The results, shown in Fig. (6), indicate that 92.9% of these participants have positive expectations of using an AutoML tool to enhance their performance in machine learning projects. This high positive response rate may reflect the potential for increased productivity and reduced reliance on human intervention in machine learning projects, as highlighted by [30].

When asked about design suggestions, participants emphasized simplicity and ease of understanding and interpreting results. They suggested assessing the quality of unsupervised learning results and incorporating an interface for uploading data. They also highlighted the need to present the outputs of trained models in a way that even machine learning newbies can comprehend without extensive assistance from ML professionals. Furthermore, an analysis of participants' programming language preferences revealed that 76.32% started their programming journey with Python. Based on this, we recommend that AutoML platforms be designed to generate Python code, enabling newbies to view and learn from the code underlying their AutoML projects and further enhancing their understanding and learning experience.

Distribution of Respondents’ Perception on the Need for Automated Machine Learning (AutoML).
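To illustrate the recommendation that AutoML platforms generate Python code, here is a hypothetical sketch of how a platform might export the pipeline it selected as a short scikit-learn script that a learner can read and rerun. The configuration format and the function `export_as_python` are assumptions for illustration. The scikit-learn names in the generated script (`make_pipeline`, `StandardScaler`, `RandomForestClassifier`) are real, but no specific platform's export feature is implied; tools such as TPOT already offer a comparable `export` method that writes the best pipeline to a Python file.

```python
import textwrap

# Hypothetical record of the pipeline an AutoML run selected; the keys are
# illustrative assumptions, not any platform's real export format.
best_pipeline = {
    "scaler": "StandardScaler",
    "model_module": "sklearn.ensemble",
    "model": "RandomForestClassifier",
    "params": {"n_estimators": 200, "max_depth": 8},
}


def export_as_python(config):
    """Render the selected pipeline as a short, readable scikit-learn script."""
    scaler = config["scaler"]
    module = config["model_module"]
    model = config["model"]
    # repr() keeps strings quoted and numbers as literals in the generated code.
    args = ", ".join(f"{name}={value!r}" for name, value in config["params"].items())
    return textwrap.dedent(f"""\
        from sklearn.pipeline import make_pipeline
        from sklearn.preprocessing import {scaler}
        from {module} import {model}


        def build_pipeline():
            # Scale the features, then fit the model chosen by the AutoML search.
            return make_pipeline(
                {scaler}(),
                {model}({args}),
            )
        """)


source = export_as_python(best_pipeline)
compile(source, "<generated>", "exec")  # syntax check only; nothing is imported or run
print(source)
```

Generating plain, commented source rather than an opaque model file keeps the learning path open: a newbie can start from the AutoML result and gradually edit the code by hand.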

4.2. Phase 2: Exploratory Analysis

In this phase, we focused on participants who had prior experience with AutoML to gather insights into their usability experience. For those without experience in AutoML, we organized a 3-day workshop dedicated to the subject. The results and findings from that workshop will be published in a future article. In this section, we will discuss the insights gained from analyzing the demographic information regarding the usability experience of those participants with prior AutoML experience.

4.2.1. Gender Issues in Adoption of AutoML

Our study revealed a marked gender disparity among the respondents: males accounted for 87.5% and females only 12.5%. Although questionnaires were distributed to and administered to both genders, this outcome reflects a persistent trend of low technology adoption among females, consistent with concerns raised by [31]. Women make up less than 11% of those in automated environments, and this underrepresentation puts them at a higher risk of job loss due to automation. Developers and usability testers should therefore prioritize gender-inclusive programming environments that cater to individuals from diverse backgrounds. Considering gender diversity in the design and development of AutoML platforms, as emphasized by [32, 33], is essential to addressing this disparity.

4.2.2. Rigidity

Our findings also revealed that many respondents who identified themselves as programming experts do not use AutoML, preferring the traditional approach of running and evaluating their machine learning models themselves. This aligns with concerns raised by Naik [34], who notes that experts perceive AutoML as too restrictive because it handles only a small portion of the end-to-end process. According to Naik, the most impactful task in machine learning, feature engineering, is domain-specific and cannot be fully automated. Consequently, AutoML is seen as a job generator that executes a series of experiments within a predefined framework.

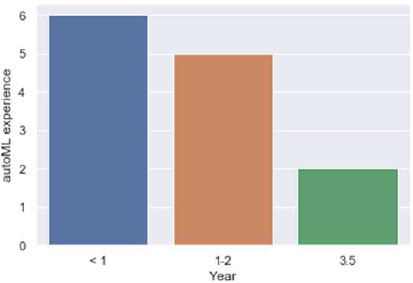

4.3. Usability Issues of AutoML

Usability is a measure of how well a specific user (Newbie, ML expert, ML enthusiast, or Domain expert) can use a product or design (in this case, AutoML) to achieve a defined goal effectively, efficiently, and satisfactorily [28]. This section targets users with AutoML experience; common platforms among them included BigML and RapidMiner. A total of 16 participants with AutoML experience took part, and Fig. (7) shows the distribution of their years of experience using AutoML.

The System Usability Scale and ISO usability metrics were adopted to evaluate usability issues in AutoML, with responses recorded on a 5-point Likert scale. The findings are discussed as follows:

Respondents’ Experience Levels with Automated Machine Learning Tools Based on Years of Practical Usage.

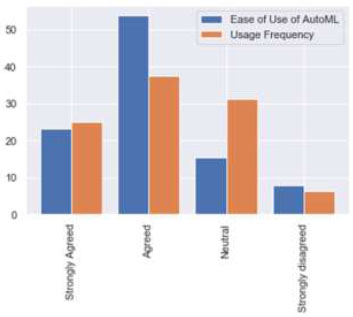

4.3.1. Ease of Use and Usage Frequency

Fig. (8) shows participants' perceptions of the ease of use and frequency of use of AutoML. Regarding ease of use, 23.07% of participants strongly agreed that AutoML is easy to use, 53.8% agreed, 15.4% were neutral, and 7.8% strongly disagreed. In terms of usage frequency, 25% strongly agreed that they would like to use AutoML frequently, 37.5% agreed, 31.25% were neutral, and 6.25% strongly disagreed. The two items show similar proportions at the extremes of the scale but differ in the middle range (agree: 53.8% vs. 37.5%; neutral: 15.4% vs. 31.25%). This indicates that ease of use, although important, does not guarantee that users will want to use the system frequently. Further investigation of this difference might reveal contributing factors such as limited feature engineering modules and use cases, as well as restricted explainability and interpretability in AutoML.
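The percentages in this section are per-item distributions of 5-point Likert responses; for an item answered by all 16 respondents, each person accounts for 6.25 percentage points. The sketch below shows that summary together with the common "top-two-box" share (strongly agree plus agree). The response counts are back-calculated from the usage-frequency percentages above (4, 6, 5, 0, and 1 respondents), and the helper `summarise` is hypothetical.

```python
from collections import Counter

LEVELS = ["Strongly agree", "Agree", "Neutral", "Disagree", "Strongly disagree"]


def summarise(responses):
    """Percentage of responses at each Likert level, plus the top-two-box share."""
    counts = Counter(responses)
    n = len(responses)
    pct = {level: 100 * counts[level] / n for level in LEVELS}
    top_two = pct["Strongly agree"] + pct["Agree"]
    return pct, top_two


# Counts back-calculated from the reported usage-frequency percentages (n = 16).
usage_frequency = (
    ["Strongly agree"] * 4 + ["Agree"] * 6 + ["Neutral"] * 5 + ["Strongly disagree"] * 1
)
pct, top_two = summarise(usage_frequency)
for level in LEVELS:
    print(f"{level:<18}{pct[level]:6.2f}%")
print(f"top-two-box agreement: {top_two:.2f}%")
```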

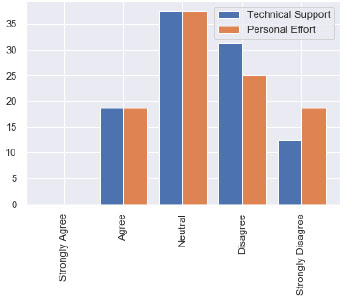

4.3.2. Technical Support

Fig. (9) displays participants' responses regarding their need for technical support and whether they must learn extensively to use AutoML effectively. For technical support, 12.5% strongly disagreed, 31.25% disagreed, 37.5% were neutral, and 18.75% agreed that they needed it. Similarly, 18.75% strongly disagreed, 25% disagreed, 37.5% were neutral, and 18.75% agreed that extensive learning was necessary before using AutoML. Notably, the proportions who agreed (18.75%) and who were neutral (37.5%) are identical for both items. This suggests that some existing AutoML platforms still require users to possess a certain level of technical knowledge to operate them effectively, which runs counter to AutoML's original purpose of simplifying the process. Further research at the intersection of AI and HCI is therefore needed to reduce this technical burden and make AutoML more user-friendly. These findings align with the results of [35-37], which revealed that many current AutoML systems still rely heavily on human intervention.

4.3.3. Confidence

According to Sakpere [38], confidence is key to continuing a task or engaging with a new environment or learning paradigm. We therefore sought to understand users' confidence in using AutoML: 18.75% strongly agreed that they had a high level of confidence, 62.5% agreed, 12.5% were neutral, and 6.25% disagreed.

Comparison of Perceived Ease of Use and Usage Frequency of Automated Machine Learning Tools among Respondents.

Respondents’ Perceptions of Technical Support Availability and Required Personal Effort in Using Automated Machine Learning Tools.

4.3.4. User Experience

Based on the responses gathered, users' experiences with AutoML can be summarized as follows:

- Easy understanding of machine learning concepts without coding: Users found that AutoML offered a simplified way to grasp the fundamental concepts of machine learning without the requirement for extensive coding knowledge.

- Eliminating complexity in machine learning project workflow: Users appreciated how AutoML streamlined the workflow of machine learning projects, removing complexities and making the process more accessible and manageable.

- Efficient model development: Users expressed satisfaction with the efficiency of AutoML, as it allowed them to develop models in a short timeframe and with minimal effort compared to traditional approaches.

- Flexibility in using different learning algorithms: Users found it intriguing that AutoML provided the flexibility to experiment with various learning algorithms without significant alterations to the underlying code structure. This flexibility enhanced their ability to explore different approaches and optimize their models.

Overall, users reported positive experiences with AutoML, emphasizing its capability to simplify the understanding of machine learning concepts, streamline project workflows, and facilitate efficient model development with flexibility in algorithm selection.

4.4. Learning via AutoML

The findings from the survey regarding participants' opinions on AutoML and its role in machine learning, summarized in Table 1, are explained as follows:

| Insight Question | Strongly Agree (%) | Agree (%) | Neutral (%) | Disagree (%) | Strongly Disagree (%) |
|---|---|---|---|---|---|
| Is AutoML Ideal for Learning? | 12 | 37.5 | 18.75 | 12 | 18.75 |
| Is AutoML better than Python? | 18.75 | 43.75 | 6.25 | 12.5 | 18.75 |
| Should AutoML be combined with other programming languages? | 37.5 | 37.5 | 12.5 | 6.25 | 6.25 |
| I will encourage a newbie to use AutoML for learning machine learning techniques | 6.25 | 43.75 | 6.25 | 31.25 | 12.5 |
| AutoML is best suitable for developing business solutions and not necessarily for educational/learning purposes | 18.75 | 43.75 | 18.75 | 12.5 | 6.25 |

- General perception of AutoML for learning ML: 12% of participants strongly agreed that AutoML is an ideal tool for learning ML, while 37.5% agreed, 18.75% were neutral, 12% disagreed, and 18.75% strongly disagreed. This indicates a mixed opinion among users regarding the suitability of AutoML for learning ML.

- Comparison of AutoML with Python for learning ML: When comparing AutoML to Python, 18.75% of participants strongly agreed that AutoML is easier to use for learning ML, 43.75% agreed, 6.25% were neutral, 12.5% disagreed, and 18.75% strongly disagreed. These results suggest that while some participants find AutoML more user-friendly than Python, others still prefer traditional programming languages like Python for learning ML.

- Combination of AutoML with a programming language for learning ML: Regarding the combination of AutoML with a programming language for learning ML, 37.5% of participants strongly agreed that AutoML is best used alongside a programming language, another 37.5% agreed, 12.5% were neutral, 6.25% disagreed, and 6.25% strongly disagreed. This indicates that many participants recognize the importance of combining AutoML with a programming language to enhance their learning experience.

- AutoML Suitability for Newbies: Regarding the recommendation of AutoML for newbies to learn machine learning, 6.25% of participants strongly agreed that AutoML is suitable for beginners, 43.75% agreed, 6.25% remained neutral, 31.25% disagreed, and 12.5% strongly disagreed. Opinion is therefore nearly evenly split (50% would recommend it versus 43.75% who would not), suggesting that a substantial share of participants do not view AutoML as an effective platform for newcomers to understand the intricacies of machine learning coding.

- AutoML application domain suitability: We further investigated whether AutoML is better suited to business or to educational purposes. Of the participants, 18.75% strongly agreed that AutoML is best suited to developing business solutions rather than to educational purposes, 43.75% agreed, 18.75% were neutral, 12.5% disagreed, and 6.25% strongly disagreed.
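To compare the five items in Table 1 at a glance, the five-point responses can be collapsed into overall agreement, neutrality, and disagreement. The following Python sketch does this using the percentages reported in Table 1; the short dictionary keys and the `collapse` helper are illustrative names introduced here, not part of the survey instrument.

```python
# Table 1 percentages per item, in the column order:
# (strongly agree, agree, neutral, disagree, strongly disagree)
TABLE_1 = {
    "ideal_for_learning": (12, 37.5, 18.75, 12, 18.75),
    "better_than_python": (18.75, 43.75, 6.25, 12.5, 18.75),
    "combine_with_programming": (37.5, 37.5, 12.5, 6.25, 6.25),
    "recommend_to_newbie": (6.25, 43.75, 6.25, 31.25, 12.5),
    "business_over_learning": (18.75, 43.75, 18.75, 12.5, 6.25),
}


def collapse(row):
    """Collapse a five-point Likert row into (agree, neutral, disagree) totals."""
    strongly_agree, agree, neutral, disagree, strongly_disagree = row
    return strongly_agree + agree, neutral, disagree + strongly_disagree


for item, row in TABLE_1.items():
    agree, neutral, disagree = collapse(row)
    print(f"{item}: agree {agree}%, neutral {neutral}%, disagree {disagree}%")
```

For the newbie item, for example, this gives 50% agreement against 43.75% disagreement, which is why opinion on that question reads as nearly evenly split, whereas combining AutoML with a programming language draws 75% agreement against 12.5% disagreement.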

4.5. Evaluation of Computational Capabilities of Cloud-Based/AutoML Tools Compared to Generic ML Tools

The assessment of the computational capabilities of cloud-based/AutoML tools compared to generic ML tools can be summarized as follows:

- Training Time is Faster on Cloud-based Tools

Of the 16 respondents, 31.3% agreed and 43.8% strongly agreed that training time on cloud-based tools is faster. However, 12.5% disagreed, and another 12.5% were neutral. These results are summarized in Table 2.

- Reproducibility is Better Using Cloud-based Tools

Of the 16 respondents, 13 (81.3%) agreed that reproducibility, the ability to replicate an ML operation and obtain the same results as the original work, is better with cloud-based tools. Two participants (12.5%) strongly agreed, nobody disagreed, and one participant (6.3%) was neutral.

| Evaluation Metric | Agree (%) | Strongly Agree (%) | Disagree (%) | Neutral (%) |
|---|---|---|---|---|
| Training Time | 31.30 | 43.80 | 12.50 | 12.50 |
| Reproducibility | 81.30 | 12.50 | 0.00 | 6.30 |
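Reproducibility in the sense defined above depends partly on controlling every source of randomness in a pipeline. The Python sketch below illustrates one such ingredient, a seeded shuffle-and-split of a dataset. It is a generic illustration under that assumption, not a description of how any particular cloud-based or AutoML platform achieves reproducibility, and `seeded_split` is a hypothetical helper.

```python
import random


def seeded_split(rows, test_fraction, seed):
    """Shuffle a copy of `rows` with a seeded generator and split it into (train, test)."""
    rng = random.Random(seed)  # independent generator: the shuffle depends only on `seed`
    shuffled = list(rows)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


data = list(range(20))
first = seeded_split(data, test_fraction=0.25, seed=42)
second = seeded_split(data, test_fraction=0.25, seed=42)
print(first == second)  # True: the same seed reproduces the same split
```

Using a private `random.Random` instance rather than the module-level generator keeps the split unaffected by any other code that draws random numbers, which is one reason pipelines that record their seeds are easier to replicate.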

- Classification Algorithm Performs Better

Of the 16 users of classification algorithms in AutoML, 6 (37.5%) agreed that the classification algorithm in AutoML performs better at correctly classifying objects, 3 (18.8%) strongly agreed, 3 (18.8%) disagreed, and 4 (25.0%) were neutral, as shown in Table 3.

| Evaluation Metric | Agree (%) | Strongly Agree (%) | Disagree (%) | Strongly Disagree (%) | Neutral (%) |
|---|---|---|---|---|---|
| Classification Performance | 37.50 | 18.80 | 18.80 | - | 25.00 |
| Confidentiality (Security) | 37.50 | 18.80 | 31.30 | - | 12.50 |
| Tuning & Optimization | 18.80 | 6.30 | 25.00 | 25.00 | 25.00 |
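The percentages in Table 3 follow from the response counts reported in the text of this section, out of 16 respondents. The Python sketch below recomputes them; the counts are taken from the paragraphs on classification performance, confidentiality, and hyperparameter tuning, and cells shown as "-" in the table are entered as zero because the remaining counts in those rows already sum to 16. The names `to_percent` and `TABLE_3_COUNTS` are illustrative.

```python
from decimal import Decimal, ROUND_HALF_UP

N_RESPONDENTS = 16


def to_percent(count, total=N_RESPONDENTS):
    """Convert a response count to a percentage, rounded half-up to one decimal place."""
    value = Decimal(count) * 100 / Decimal(total)
    return value.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)


# Counts in Table 3's column order:
# (agree, strongly agree, disagree, strongly disagree, neutral)
TABLE_3_COUNTS = {
    "classification_performance": (6, 3, 3, 0, 4),
    "confidentiality": (6, 3, 5, 0, 2),
    "tuning_and_optimization": (3, 1, 4, 4, 4),
}

for metric, counts in TABLE_3_COUNTS.items():
    assert sum(counts) == N_RESPONDENTS  # each respondent gave exactly one answer
    print(metric, [str(to_percent(c)) for c in counts])
```

Rounding is done half-up with `decimal` because Python's built-in `round()` rounds exact ties to the nearest even digit and would report 31.25 as 31.2 rather than the 31.3 shown in the table.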

- Confidentiality (Security)

Recent developments have raised privacy concerns about AutoML, ranging from the potential leakage of training data to inference attacks. We therefore sought to understand users' experiences with, and confidence in, AutoML's ability to keep training data confidential compared with traditional platforms or approaches. Of the 16 respondents, 6 (37.5%) agreed that the confidentiality of training data is better in AutoML, 3 (18.8%) strongly agreed, 2 (12.5%) were neutral, and 5 (31.3%) disagreed.

- Hyperparameter Tuning and Optimization

In machine learning, hyperparameter tuning (or optimization) is the process of selecting a set of optimal hyperparameters for a learning algorithm. The process is often regarded as tedious, and the traditional brute-force approach, which exhaustively evaluates every combination of candidate values, has proven inefficient because the number of combinations grows multiplicatively with each added hyperparameter. AutoML is widely expected to help identify optimal hyperparameters. During the usability study, we therefore sought to understand users' experiences of using AutoML to find optimal hyperparameters, particularly compared with the traditional approach. One participant (6.3%) strongly agreed that AutoML performs better at finding optimal hyperparameters, three (18.8%) agreed, four (25.0%) were neutral, four (25.0%) disagreed, and four (25.0%) strongly disagreed; in other words, half of the respondents did not find AutoML better for this task.
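To make the cost of the brute-force approach concrete, the Python sketch below contrasts exhaustive grid search with random search, one common alternative, on a hypothetical search space. The hyperparameter names, candidate values, and the `toy_score` function (a stand-in for training and validating a real model) are all assumptions for illustration; the sketch does not describe the search strategy of any specific AutoML platform.

```python
import itertools
import random

# Hypothetical search space: each hyperparameter has a handful of candidate values.
SEARCH_SPACE = {
    "learning_rate": [0.001, 0.01, 0.1, 0.3],
    "max_depth": [2, 4, 6, 8, 10],
    "n_estimators": [50, 100, 200, 400],
    "subsample": [0.5, 0.75, 1.0],
}


def toy_score(config):
    """Stand-in for a validation score; a real tuner would train and evaluate a model here."""
    return (-abs(config["learning_rate"] - 0.1)
            - abs(config["max_depth"] - 6) / 10
            - abs(config["n_estimators"] - 200) / 1000
            - abs(config["subsample"] - 0.75))


def grid_search(space):
    """Brute force: evaluate every combination in the Cartesian product of candidates."""
    names = list(space)
    configs = [dict(zip(names, values)) for values in itertools.product(*space.values())]
    return max(configs, key=toy_score), len(configs)


def random_search(space, budget, seed=0):
    """Evaluate only `budget` randomly sampled combinations."""
    rng = random.Random(seed)
    configs = [{name: rng.choice(values) for name, values in space.items()}
               for _ in range(budget)]
    return max(configs, key=toy_score), budget


best_grid, grid_cost = grid_search(SEARCH_SPACE)
best_rand, rand_cost = random_search(SEARCH_SPACE, budget=20)
print(f"grid search: {grid_cost} evaluations, best {best_grid}")
print(f"random search: {rand_cost} evaluations, best {best_rand}")
```

With four hyperparameters of 4, 5, 4, and 3 candidate values, grid search must evaluate 4 × 5 × 4 × 3 = 240 configurations, and each additional hyperparameter multiplies that count again; random search caps the cost at a fixed budget (20 here) at the risk of missing the best configuration.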

4.6. Design Recommendations

After identifying usability issues in AutoML, we engaged participants to provide suggestions for enhancing its design and the factors that should be considered during development.

4.6.1. Enhancing Usability and Acceptance of AutoML: Design Insights

The insights gathered from participants regarding design suggestions to enhance the usability and widespread acceptance of AutoML can be categorized into the following key themes:

4.6.1.1. User Interface and Ease of Use

Participant 26 emphasizes the need for a user-friendly interface that simplifies uploading data and presenting training results. The goal is to enable individuals new to machine learning to understand and interpret training outcomes independently, without relying on professional assistance.

Participant 19 offers a comprehensive list of recommendations: ensuring the explainability and interpretability of the system, crafting a user-friendly interface, providing robust documentation, allowing for customization, ensuring flexibility, and prioritizing privacy and security considerations.

4.6.1.2. Enhancements and Technical Adjustments

Participant 31 suggests adopting a Python-based platform with the necessary plugins. The ease of installing libraries should be clearly communicated, as difficult installations of machine learning libraries could discourage users.

Participant 3 calls for more advanced feature engineering modules, noting that existing AutoML solutions often lack comprehensive feature engineering capabilities, which are crucial for optimizing the performance of machine learning models. Additionally, Participant 13 emphasizes the importance of enhancing explainability and interpretability features within AutoML systems, underscoring the need for transparent model outcomes.

4.6.1.3. Accessibility and Scope

Participant 14 emphasizes the importance of evaluating the quality of unsupervised learning results, particularly when a benchmark for comparison is absent. Participant 20 highlights the limited applicability of AutoML due to the dynamic nature of problems encountered in everyday scenarios.

These insights provide valuable guidance for refining the design of AutoML, improving its usability, and broadening its applicability across various contexts.

4.6.2. Considerations in AutoML Design

Participants also identified the following factors to consider in AutoML design:

4.6.2.1. Handling Diverse Data Types

Beyond common data types, AutoML should also handle complex data types to ensure versatility in its applications.

AutoML should also prioritise user-friendliness, thorough documentation, and well-commented code to improve the user experience.

4.6.2.2. Synergy between HCI and AI Methodologies

The feedback and comments provided are as follows:

| Participant ID | Comments |
|---|---|
| P31 | Implementing a robust user interface and an interactive platform is pivotal in elevating the user experience. |
| P26 | Promote clear comprehension and meaningful interpretation of trained data by focusing on intuitive design. |
| P21 | Center the design around optimizing the user experience. |
| P22 | Yes, particularly for individuals less familiar with coding. This AutoML could significantly assist them in harnessing its capabilities. |
| Unknown | Absolutely, considering the ongoing shift towards AI and data science in the realm of computing. Aligning HCI practices with this trend can result in an enhanced platform that instills confidence. |

4.7. Limitations and Future Work

Our participants are predominantly African, and the majority are male. A high proportion of participants are also machine learning enthusiasts with an intermediate level of programming experience. Additionally, our research did not determine whether participants were active or inactive users of AutoML. In future work, we plan to engage frequent users of various AutoML environments to assess their satisfaction with such platforms and identify areas of concern.

CONCLUSION

In our study, participants suggested that customizability, which is vital for real-world problem-solving, is one of the design considerations that would make AutoML more usable and widely accepted. For example, “difficulty in installing machine learning libraries to make operations dynamic can put a user off,” and “Currently, most AutoML have limited feature engineering modules to achieve better performance for machine learning models” (P31) (P19) (P3). Participants’ responses also indicated that AutoML is too ‘Blackboxed’: its internal operations are not transparent (P26). Furthermore, participants found it unclear how to check intermediate results and how to communicate data and models to teams and stakeholders. “More effort should be placed on explainability and interpretability of the AutoML” (P13) (P19) (P26).

Regarding the involvement of HCI in the AutoML/AI field, participants identified the need to improve user experience by providing a good user interface and a proper interactive platform (P21) (P31). Our study also found that AutoML is limited in some domains; as one participant put it, “I think AutoML has limited Use Cases due to the ever-changing problems we solve every day” (P20). Lastly, our research revealed that many users have high expectations for AutoML, with most participants agreeing that it could ease the process of learning machine learning. This underscores the potential of AutoML to bridge the AI skills gap and enhance productivity.

Future research could conduct longitudinal studies of learning outcomes, tracking users’ progression from beginners to experts, to explore how AutoML affects long-term ML proficiency and to compare AutoML-assisted learning with traditional coding-based approaches.

Additionally, there is potential to develop and evaluate AutoML tools tailored for specific purposes and applications to overcome limitations in feature engineering and interpretability.

Furthermore, hybrid systems that enable users to iteratively refine AutoML-generated models can be explored. This will help balance automation with expert oversight to enhance flexibility and trust. Similarly, emerging technologies can be integrated to streamline model deployment for resource-constrained, self-powered services.

By adhering to these guidelines, future efforts can further promote the democratization of AutoML while ensuring it meets the diverse needs of users across technical, geographical, and demographic contexts.

AUTHOR CONTRIBUTION

The authors confirm their contribution to the paper as follows: A.S. conceived the study and designed the methodology; H.A. and A.A. worked on the modeling; O.A. and S.A. worked on the literature review; all authors contributed to the Introduction; and O.A. wrote the abstract and summary and proofread the manuscript.

All authors reviewed the results and approved the final version of the manuscript.

ETHICS APPROVAL AND CONSENT TO PARTICIPATE

This research was approved by the Bowen University Research Ethical Board (approval number BUREC/COCCS/CSC/0002). The ethical clearance is attached to this submission.

HUMAN AND ANIMAL RIGHTS

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or research committee and with the 1975 Declaration of Helsinki, as revised in 2013.

CONSENT FOR PUBLICATION

Informed consent was obtained from all subjects and/or their legal guardian(s).

AVAILABILITY OF DATA AND MATERIALS

The datasets generated and/or analysed during the current study are available at the following Google Sheets link: https://docs.google.com/spreadsheets/d/1Nq0NIRIhK7lKA42F3ecz4YAuNeIEvlpYP3oCFqZjHUY/edit?usp=sharing

ACKNOWLEDGEMENTS

Declared none.